Unlocking the Power of Data Annotation: 7 Essential Practices for Successful ML Deployment

When it comes to deploying machine learning (ML) models, many data science teams find themselves stuck in the development phase. According to Gartner, nearly 80% of ML projects never make it into production. Even for those that do, only about 60% generate tangible business value.

The issue rarely lies in the model architecture itself. With the rise of standardized algorithms, building models has become more accessible. The true challenge is building a high-quality training dataset.

Just because a model performs well in testing doesn’t mean it will succeed in the real world. The quality of the training data plays a critical role: poorly labeled, biased, or inconsistent data can doom even the most sophisticated models. Common hurdles when preparing training data include:

✅ Sourcing relevant and diverse data

✅ Structuring comprehensive datasets

✅ Defining clear project goals and oversight

✅ Streamlining workforce productivity

✅ Maintaining rigorous quality control

This post explores key best practices for managing data annotation workflows so your ML models can move confidently from prototype to production and deliver measurable impact.

Understanding the Lifecycle of a Data Annotation Project

A well-managed annotation project typically follows these seven core phases:

1. Define Your Project Blueprint

Start by clearly outlining your project's objectives. What problem are you solving, and how will the annotations contribute to your solution? Define the types of data you’ll need, how to acquire it, the specific annotations required, and how those annotations will be used downstream.

It’s often more effective to run smaller, focused projects rather than one massive task. Simpler annotation assignments generally yield higher accuracy from annotators. Be sure to establish stakeholder roles and define communication protocols early to avoid bottlenecks.

2. Assemble a Diverse and Balanced Dataset

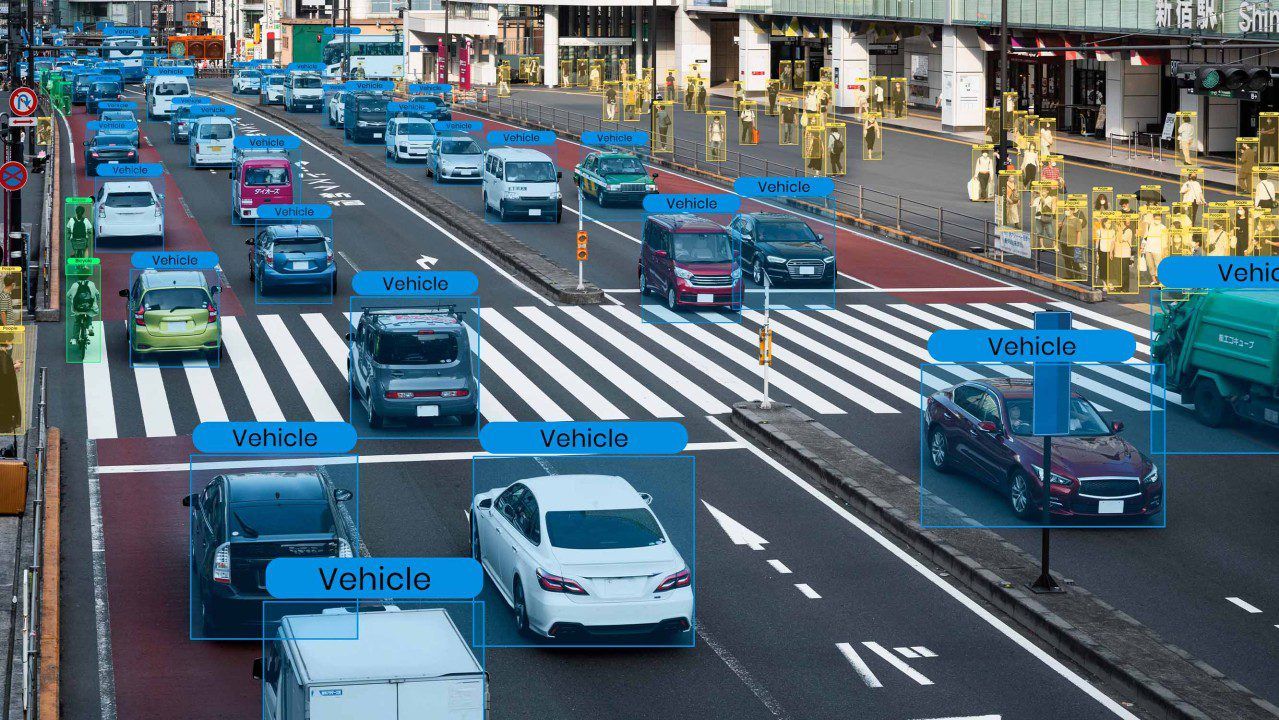

The quality of your data is more important than its quantity. Aim for variety in your dataset to reduce bias and improve model generalization. For instance, if your model is designed to detect pedestrians, include people with different body types and clothing, captured under varied lighting and weather conditions: rainy mornings, sunny afternoons, and everything in between.
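One rough way to check variety is to count how often each condition appears in your dataset's metadata and flag the rare ones. The sketch below assumes each sample carries a metadata dictionary; the `lighting` field, the `find_underrepresented` helper, and the 10% threshold are illustrative assumptions, not part of any particular tool.

```python
from collections import Counter


def find_underrepresented(samples, attribute, min_share=0.1):
    """Return attribute values whose share of the dataset is below min_share."""
    counts = Counter(sample[attribute] for sample in samples)
    total = sum(counts.values())
    return {
        value: count / total
        for value, count in counts.items()
        if count / total < min_share
    }


# Hypothetical metadata for a pedestrian-detection dataset.
samples = (
    [{"lighting": "sunny"}] * 70
    + [{"lighting": "overcast"}] * 25
    + [{"lighting": "rainy"}] * 5
)

underrepresented = find_underrepresented(samples, "lighting", min_share=0.1)
print(underrepresented)  # {'rainy': 0.05}
```

Note that this only flags conditions that appear at least once. Conditions missing from the dataset entirely still need to be caught by comparing against a list of the conditions you expect in production.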

3. Select the Right Annotation Workforce

Annotation requires human input—but not just anyone will do. The complexity of your project should guide your choice of workforce. Crowdsourced annotators might be sufficient for general tasks, while specialized domains like medical imaging demand subject-matter experts (SMEs). These may come from within your organization or from professional annotation service providers.

4. Choose the Right Annotation Platform

Annotation tools are not created equal. The right platform should safeguard data privacy, integrate smoothly with your systems, and provide a user-friendly interface. Features that support quality checks, task assignment, and project management are crucial for maintaining efficiency.

5. Establish Clear Guidelines and Ongoing Feedback

Annotation quality directly affects model performance. Even a 10% dip in label accuracy can reduce model effectiveness by 2–5%. Provide detailed instructions and visual examples to ensure annotators are aligned. Update guidelines as the project evolves and maintain open communication channels so your workforce can raise questions or flag ambiguities.

6. Implement a Robust Quality Assurance Process

Quality assurance (QA) should begin on day one. Use QA methods like consensus labeling (several annotators label the same item and the majority label is kept) or honeypot tasks (items with known answers mixed into the work queue) to measure accuracy. Rather than labeling more data, focus on annotating high-quality data with clear agreement among annotators. Use visualization tools within your platform to track annotation consistency and detect problems such as data imbalance, unclear instructions, or drift over time.
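As a minimal sketch of the two QA methods mentioned above, the snippet below computes a majority-vote consensus label with an agreement threshold and scores one annotator against honeypot items. The function names, the 0.6 threshold, and the sample labels are assumptions for illustration; most annotation platforms offer their own versions of these checks.

```python
from collections import Counter


def consensus_label(labels, min_agreement=0.6):
    """Return (majority_label, agreement), or (None, agreement) below the threshold."""
    top_label, top_count = Counter(labels).most_common(1)[0]
    agreement = top_count / len(labels)
    if agreement >= min_agreement:
        return top_label, agreement
    return None, agreement


def honeypot_accuracy(answers, gold):
    """Fraction of honeypot items (those with known gold labels) answered correctly."""
    scored = [item for item in gold if item in answers]
    if not scored:
        return None  # the annotator has not seen any honeypot items yet
    correct = sum(answers[item] == gold[item] for item in scored)
    return correct / len(scored)


# Three annotators labeled the same image; two of them agree.
label, agreement = consensus_label(["pedestrian", "pedestrian", "cyclist"])

# No majority: the item should be sent back for review.
unresolved, _ = consensus_label(["pedestrian", "cyclist", "none"])

# Hypothetical gold labels and one annotator's answers.
gold = {"img_01": "pedestrian", "img_02": "cyclist", "img_03": "pedestrian", "img_04": "none"}
answers = {"img_01": "pedestrian", "img_02": "pedestrian", "img_03": "pedestrian",
           "img_04": "none", "img_09": "cyclist"}
accuracy = honeypot_accuracy(answers, gold)
print(label, unresolved, accuracy)  # pedestrian None 0.75
```

Items that fail the agreement threshold are often the most useful signal: they tend to point at ambiguous guidelines rather than careless annotators.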

7. Embrace Iteration and Continuous Data Refinement

Your model’s performance hinges on the quality of its training data. After training and deployment, continuously monitor model output. A data-centric AI approach emphasizes improving data rather than endlessly tweaking the algorithm.

Regularly review where the model underperforms and adjust your dataset accordingly. You can iterate on:

✅ The size and composition of your dataset

✅ Annotation consensus thresholds

✅ Workforce guidelines and tools

Refining your dataset not only boosts model performance but also cuts costs by reducing redundant labeling, which is estimated to account for up to 40% of training data.
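To review where the model underperforms, one simple approach is to break accuracy down by a metadata slice and look at the weakest group first. The sketch below assumes each evaluation record holds a prediction, the true label, and a slice attribute; the `accuracy_by_slice` helper and the field names are hypothetical.

```python
from collections import defaultdict


def accuracy_by_slice(records, slice_key):
    """Compute accuracy separately for each value of slice_key."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for record in records:
        key = record[slice_key]
        totals[key] += 1
        correct[key] += int(record["predicted"] == record["actual"])
    return {key: correct[key] / totals[key] for key in totals}


# Hypothetical evaluation results for a pedestrian detector.
records = [
    {"lighting": "sunny", "predicted": "pedestrian", "actual": "pedestrian"},
    {"lighting": "sunny", "predicted": "none", "actual": "none"},
    {"lighting": "rainy", "predicted": "none", "actual": "pedestrian"},
    {"lighting": "rainy", "predicted": "pedestrian", "actual": "pedestrian"},
]

scores = accuracy_by_slice(records, "lighting")
weakest = min(scores, key=scores.get)
print(scores, weakest)  # {'sunny': 1.0, 'rainy': 0.5} rainy
```

The weakest slice is a natural candidate for the next round of data collection or relabeling, which keeps annotation spend focused on the examples that matter.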

Build Smarter Models by Focusing on the Data

High-quality data is the foundation of any successful ML solution. By applying these seven best practices and adopting a data-centric mindset, you’ll position your organization to build models that are not just technically sound but also valuable, efficient, and ready for real-world deployment.

🚀 Let’s Build Smarter AI Together

At CloudLytica, we help teams and enterprises deploy AI faster by providing high-quality, human-annotated data at scale. From image and video annotation to text and audio labeling, we bring the right people, tools, and processes to power your ML models.

Whether you're just starting out or refining your production pipeline, we're here to help you reduce annotation costs.

Ready to boost your model performance with smarter training data? Schedule a consultation or send an email to hello@cloudlytica.com today!
